Overview of the December 2025 AI Regulation Controversy

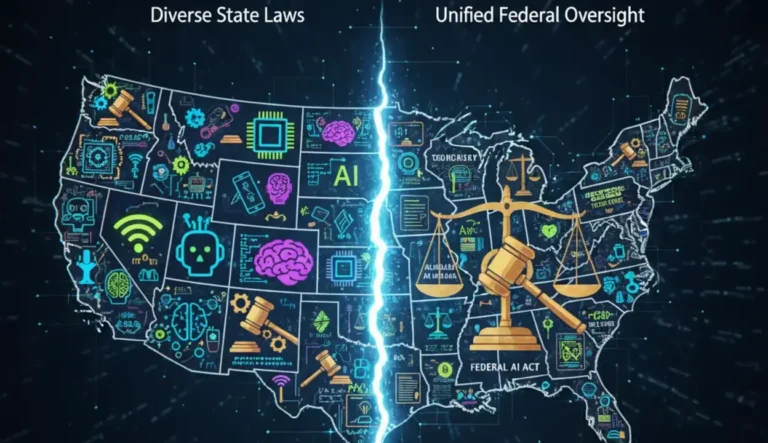

The December 2025 AI regulation controversy centers on whether AI oversight should be unified at the federal level or left to the states. President Trump plans to issue an executive order establishing a national standard that would preempt 38 state AI laws, despite strong opposition from states that favor local regulation.

Key issues include balancing federal and state control, the legality of federal preemption via executive order, and industry concerns over regulatory compliance burdens. The conflict exemplifies federalism challenges in AI governance with global implications.

The debate highlights tensions between innovation facilitation and safety safeguards amid fragmented state laws. It reflects broader concerns about maintaining U.S. AI competitiveness while protecting public interests from AI risks.

Timeline of Key Events Leading to Trump’s Executive Order

In late 2023, President Biden issued an executive order (EO) on AI oversight that promoted safety and monitoring. In early 2025, Trump rescinded it and issued an EO aimed at removing barriers to AI innovation, reducing federal oversight.

Throughout 2025, the administration circulated draft orders to preempt state AI laws and moved to establish a Department of Justice (DOJ) task force to challenge such laws in court. In December, Trump promised an executive order blocking state AI regulations, emphasizing federal control.

Stakeholder Positions: Senator Gounardes, Assembly Member Bores, and Trump Administration

New York State Senator Andrew Gounardes and Assembly Member Alex Bores support strong state AI regulation through the RAISE Act, which requires safety plans, incident reporting, and penalties for violations to ensure responsible AI deployment.

The Trump administration opposes state AI laws like the RAISE Act and seeks to ban them in favor of unified federal oversight, prompting criticism from Gounardes and Bores, who see state safeguards as vital.

The "One Rule" Executive Order and Its Goals

The "One Rule" executive order aims to create a single federal AI regulatory framework, preempting conflicting state laws to streamline innovation and compliance for AI companies nationwide.

It seeks to prevent states from issuing divergent AI mandates, reduce regulatory burdens, and maintain U.S. AI leadership by centralizing authority, backed by federal funding conditions and enforcement mechanisms.

The Role of State-Level AI Safeguards and Federal Ban Risks

State laws play a crucial role in AI risk mitigation by creating tailored, responsive regulatory frameworks that address specific regional concerns and promote public safety.

States often enact AI safeguards faster than the federal government, requiring transparency, accountability, risk assessments, and sector-specific rules that protect citizens from AI harms.

These laws complement federal efforts by allowing localized policy experimentation and by providing protection where national AI regulations leave gaps.

Importance of State Laws in AI Risk Mitigation

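States such as Colorado and Utah have moved ahead of federal action: Colorado imposes transparency and anti-discrimination requirements on high-risk AI systems, while Utah requires certain disclosures when consumers interact with generative AI, showing how states can regulate quickly and in context.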

They mandate risk assessments and disclosure, helping consumers understand AI involvement, while tailoring rules to sectors such as insurance and mental health to address unique needs.

Political Leverage of BEAD Broadband Funding

The BEAD program’s federal funds for broadband infrastructure provide political leverage, as states may face pressure to align with federal AI policies in exchange for funding access.

This leverage could be used to enforce federal AI regulatory priorities by conditioning financial support on states freezing or repealing their own AI safeguards.

Overview of BEAD Program and Its Broadband Infrastructure Role

The Broadband Equity, Access, and Deployment (BEAD) program invests $42.45 billion to expand high-speed broadband to unserved and underserved areas nationwide.

States develop plans to deploy fiber optic infrastructure, aiming to close the digital divide and enable economic growth, education, and social equity in marginalized communities.

Implications of Federal Ban on State AI Regulations

A federal ban on state AI rules would centralize oversight but risks leaving regulatory gaps, because federal standards remain underdeveloped and states would be unable to address local AI harms.

It would limit states’ ability to protect residents from risks such as algorithmic bias and election interference, and it could undermine democratic accountability and public trust in AI governance.

Rationale and Legal Foundation of the Federal AI Regulation Ban

The Trump administration aims to prevent a patchwork of state AI rules by centralizing oversight at the federal level to promote uniformity. It seeks to reduce regulatory burdens to accelerate AI innovation and limit duplicative state laws complicating compliance nationwide. The strategy involves discouraging states from adopting divergent AI regulations by linking federal funding to regulatory alignment. This approach favors a streamlined federal framework to strengthen U.S. AI leadership.

Legal debates focus on whether the executive branch can preempt state AI laws without explicit congressional authorization, since federal preemption typically requires legislation and an executive order alone lacks clear constitutional grounding to override state law. The administration has tasked the Department of Justice with litigating against state AI laws and relies on federal agencies to argue for federal primacy, raising questions about the limits of executive power in AI rulemaking.

Challenges also arise under the Dormant Commerce Clause, which bars states from regulating interstate commerce in ways that unduly burden out-of-state interests and could invalidate many state AI laws. First Amendment concerns emerge when AI regulations implicate speech, narrowing the scope of lawful restrictions. Because an executive order cannot by itself displace state law, these doctrines define how far the federal government can go through executive action alone: rather than imposing a broad ban on state AI safeguards, it largely depends on challenging them case by case in court.

Trump Administration’s Goals to Avoid Regulatory Patchwork

President Trump’s administration seeks to eliminate diverse state AI regulations to establish a single federal standard, preventing inconsistent oversight. This uniformity aims to ease compliance burdens on AI developers and accelerate innovation nationwide. The approach conditions federal funding on states adopting favorable, less restrictive AI policies, exerting indirect pressure against additional local rules.

Key policy tools include regulatory sandboxes to test AI technologies under federal guidance and revising enforcement actions that may hinder AI development. Agencies are encouraged to review and repeal policies deemed overly restrictive. Collectively, these efforts centralize AI governance while fostering a flexible, innovation-friendly environment that minimizes regulatory duplication.

Constitutional and Legal Debates Around Federal Preemption

Federal preemption of state AI laws, grounded in the Supremacy Clause, typically requires congressional action; executive orders alone do not hold clear authority to override state statutes. Legal scholars highlight that without legislation, preemption claims are vulnerable to judicial challenge. The administration’s executive directives create a DOJ litigation task force to challenge state laws but may face constitutional limits.

Federal agencies have explored asserting that some state AI regulations conflict with federal statutes like the FTC Act, complicating the preemption landscape. Congress itself has seen divided efforts on imposing preemption, reflecting political and legal uncertainty. The debate underscores the tension between federal leadership in AI policy and respecting state sovereignty in regulation.

Challenges Related to Dormant Commerce Clause and First Amendment

The Dormant Commerce Clause restricts states from enacting AI regulations that unduly burden or control interstate commerce, risking invalidation of many local AI laws. Courts apply tests that weigh these burdens against state interests, and there are concerns about the extraterritorial reach of state rules that affect AI systems operating beyond state borders.

First Amendment challenges arise where AI regulations affect speech or content, limiting governmental authority to restrict AI-generated expression. This intersection complicates regulatory efforts by imposing constitutional safeguards on lawmaking. Combined, these doctrines shape a complex constitutional terrain for both state AI safeguards and federal efforts to displace them.

Congressional Responses and Political Dynamics

Congress shows strong bipartisan resistance to federal bans on state AI regulations. Outside Congress, a coalition of 36 state attorneys general also opposes such preemption efforts.

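Attempts to insert AI preemption language into major bills have failed amid bipartisan pushback; in July 2025, for example, the Senate voted 99-1 to strip a proposed moratorium on state AI laws from the budget reconciliation bill. These defeats reflect congressional reluctance to limit state regulatory authority.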

Public opinion supports this stance, with 57% of Americans opposing federal laws that would block states from regulating AI, a view shared across party lines.

Bipartisan Resistance to AI Regulation Moratoriums in Congress

Lawmakers from both parties argue that states need flexibility to address fast-evolving AI risks. They warn that federal preemption risks ceding control to large tech firms.

Legislative setbacks for proposed federal bans demonstrate strong congressional objections to moratoriums preventing states from enacting AI safeguards.

Political Struggle Among Government, Big Tech, and States on AI Oversight

The federal government advocates uniform national AI standards and light-touch regulation of the private sector, while states pursue diverse local AI laws, creating tension between the two levels of government.

President Trump’s promise of a "One Rule" executive order to preempt state AI regulations intensifies political conflict over which level of government should govern AI.

Future Scenarios for U.S. AI Regulation

The U.S. AI regulatory future may feature a fragmented patchwork led by states, as federal harmonization lags amidst political gridlock and varied local laws.

Federal reassertion could come through sector-specific rules or a new federal agency, especially if a major AI incident creates demand for unified national standards.

The most likely outcome is a hybrid federal-state model that pairs federal baseline standards with state control over local AI applications, supported by technical guidance from federal agencies.