Core AI Technologies in Autonomous Vehicles

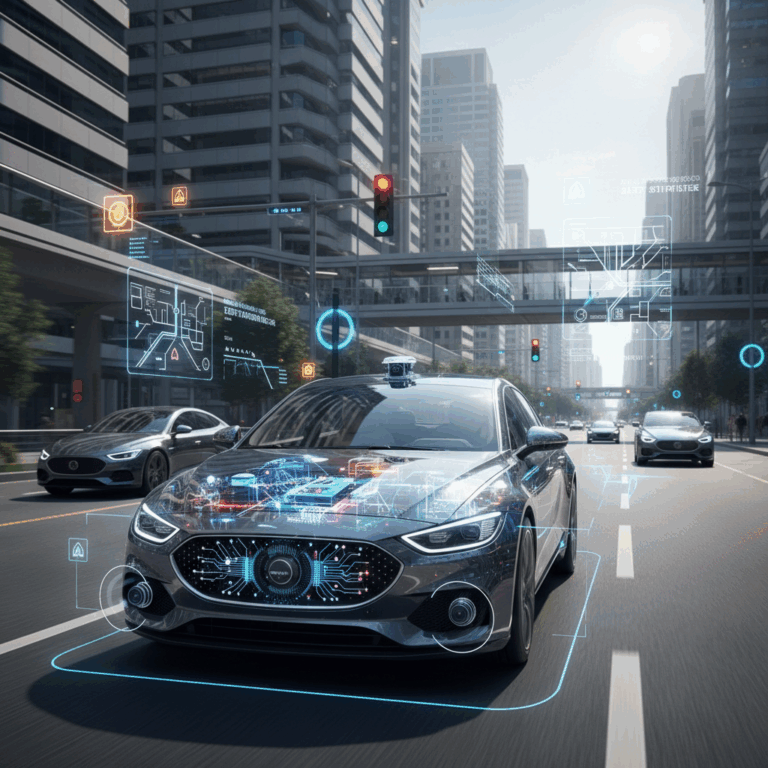

Autonomous vehicles depend heavily on advanced AI technologies to navigate and interact with complex driving environments. These technologies interpret sensor data and execute decisions without human input.

Sensor systems and learning-based algorithms work together so the vehicle can perceive its surroundings, detect obstacles, and predict how other road users will move. These capabilities are the basis for safe and efficient autonomous driving.

This combination of hardware and software forms the backbone of modern self-driving technology, and it continues to evolve as research progresses.

Sensor Technologies and Data Collection

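Sensors such as LiDAR, radar, cameras, ultrasonic sensors, and GPS collect the different kinds of data needed to understand the vehicle’s environment. Each contributes something distinct: LiDAR measures range to surrounding surfaces, radar measures range and relative speed, cameras capture object appearance, ultrasonic sensors cover short distances around the vehicle, and GPS provides the vehicle’s position.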

Data collection is vital for building an accurate and detailed 3D map of the surroundings, enabling the vehicle to recognize lanes, pedestrians, other vehicles, and obstacles in real time.

Combining input from multiple sensors provides redundancy and improves reliability, helping autonomous systems keep operating safely in challenging conditions such as bad weather or poor lighting.

AI Algorithms for Environment Perception

AI algorithms process large volumes of sensor data to classify objects, detect road features, and interpret complex scenarios. Computer vision and machine learning are the main techniques for these tasks.

These algorithms generate predictions about the future behavior of other road users, enabling the vehicle to anticipate movements and adjust its own actions proactively for safety.

Deep learning models such as convolutional neural networks (CNNs) are widely used for object recognition, while recurrent neural networks (RNNs) model sequences over time, such as the recent motion of nearby road users, to inform decisions as the driving context changes.
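To make the role of convolution in a CNN concrete, here is a minimal sketch in plain Python. It slides one hand-picked 3x3 vertical-edge kernel over a toy grayscale patch. The patch, the kernel values, and the name `conv2d_valid` are illustrative assumptions; a real CNN learns many such kernels from data and stacks them in layers.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products.

    This is the cross-correlation form that deep learning libraries
    conventionally call "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


# Toy 4x5 patch: dark road surface (0) on the left, bright lane paint (1) on the right.
patch = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-picked Sobel-like kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

response = conv2d_valid(patch, vertical_edge)
print(response)  # [[4.0, 4.0, 0.0], [4.0, 4.0, 0.0]]
```

The response is large where the window straddles the road-to-paint boundary and zero where the window covers uniform paint, which is the kind of local feature a first CNN layer tends to pick up.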

Key Components of Autonomous Vehicle AI Systems

The AI systems in autonomous vehicles consist of several essential components that work together to perceive and navigate complex environments. These components enable vehicles to understand surroundings and make safe decisions.

Core aspects include computer vision for detecting and recognizing objects, sensor fusion to merge data from multiple sources, and deep learning models that predict future events and guide decision-making processes.

Together, these components help autonomous vehicles operate accurately and adapt continuously to changing traffic conditions, improving safety and efficiency on the road.

Computer Vision and Object Recognition

Computer vision uses AI to interpret images from cameras, enabling vehicles to identify road signs, pedestrians, vehicles, and lane markings in real time. This visual understanding is crucial for safe navigation.

Recognition models must process large amounts of image data and still distinguish objects reliably under varying light and weather conditions. Object recognition then lets the vehicle react appropriately to different traffic scenarios.

The combination of real-time detection and classification helps the system maintain situational awareness, a foundational element for autonomous driving reliability and safety.
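One small building block often used with object detectors is intersection over union (IoU), which scores how much two bounding boxes overlap. The sketch below is an illustration only: the text does not name IoU, and the box format `(x_min, y_min, x_max, y_max)` and the example coordinates are assumptions. A score like this can be used, for instance, to decide whether a detection in the current frame is the same object seen in the previous frame.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clipped at zero so disjoint boxes give no overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical pedestrian boxes (pixels) from two consecutive camera frames.
previous_frame_box = (100, 50, 140, 150)
current_frame_box = (105, 52, 145, 152)
same_object = iou(previous_frame_box, current_frame_box) > 0.5
```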

Sensor Fusion Techniques

Sensor fusion integrates data from multiple sensors such as LiDAR, radar, and cameras to create a more complete and accurate environmental model than any one of them provides. Combining them compensates for the limitations of each individual sensor.

By merging sensory inputs, the system can better detect obstacles, estimate distances, and understand the context around the vehicle. This unified perception is essential for handling complex driving situations.

Sensor fusion also improves robustness against individual sensor errors, which raises overall system reliability and helps autonomous cars perform safely in changing or adverse conditions.
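As a simple illustration of merging sensor inputs, the sketch below fuses several independent range estimates of the same obstacle by inverse-variance weighting, so that more precise sensors count for more. This is one basic fusion technique, not necessarily what any production system uses, and the variances assigned to each sensor are made-up values for the example.

```python
def fuse_estimates(measurements):
    """Fuse independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by 1 / variance. Returns the fused value and
    the variance of the fused estimate.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical distance-to-obstacle readings in meters, with assumed variances.
lidar = (20.0, 0.04)   # precise range
radar = (20.6, 0.25)   # coarser range
camera = (19.0, 1.0)   # depth from a camera is assumed least certain here

fused_range_m, fused_variance = fuse_estimates([lidar, radar, camera])
print(round(fused_range_m, 2), round(fused_variance, 4))
```

The fused variance is lower than that of the best single sensor, which is the quantitative sense in which fusion improves on any one input. It also shows a limitation: the weights assume each sensor's stated uncertainty is honest, so a faulty sensor that reports low variance would still dominate.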

Deep Learning Models for Prediction and Decision-Making

Deep learning models such as CNNs and RNNs analyze sensor data to predict the future actions of other road users and decide the vehicle’s responses accordingly. These models learn patterns from vast driving data.

They support dynamic decision-making by forecasting trajectories and evaluating safe maneuvers like lane changes, braking, and acceleration. This capability is vital for smooth and adaptive driving.
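The idea of forecasting trajectories and then checking a maneuver against them can be sketched with a deliberately simple stand-in for a learned model: constant-velocity extrapolation. Everything specific here is an assumption for illustration, including the positions, speeds, the 3-second horizon, and the 10-meter safety threshold. A deployed system would replace `predict_positions` with a trained predictor.

```python
import math


def predict_positions(x, y, vx, vy, horizon_s, dt):
    """Extrapolate a position at constant velocity, one sample every `dt` seconds."""
    steps = int(round(horizon_s / dt))
    return [(x + vx * k * dt, y + vy * k * dt) for k in range(1, steps + 1)]


def min_gap(traj_a, traj_b):
    """Smallest distance between two trajectories sampled at the same times."""
    return min(math.dist(p, q) for p, q in zip(traj_a, traj_b))


# Ego vehicle at the origin doing 15 m/s; another car 30 m ahead in the
# adjacent lane (3.5 m lateral offset) doing 10 m/s. All values are hypothetical.
ego_traj = predict_positions(0.0, 0.0, 15.0, 0.0, horizon_s=3.0, dt=0.5)
other_traj = predict_positions(30.0, 3.5, 10.0, 0.0, horizon_s=3.0, dt=0.5)

SAFE_GAP_M = 10.0  # assumed threshold, not a regulatory or published value
gap_m = min_gap(ego_traj, other_traj)
maneuver_ok = gap_m >= SAFE_GAP_M
```

In this example the gap shrinks over the horizon because the ego vehicle is faster, but it stays above the assumed threshold, so the check passes.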

Advanced Neural Networks in Autonomous Driving

Recurrent neural networks (RNNs) excel in handling sequences of data, making them valuable for understanding traffic flow and predicting movements over time, while convolutional neural networks (CNNs) specialize in visual data interpretation.
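What lets an RNN handle sequences is a hidden state that is updated at every time step. The sketch below implements one basic (Elman-style) recurrent step, h_t = tanh(W_xh x_t + W_hh h_{t-1} + b), in plain Python. The weights are fixed, made-up numbers rather than trained values, and the two input sequences are hypothetical.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One recurrent update: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    h = []
    for i in range(len(b)):
        s = b[i]
        s += sum(w_xh[i][j] * x[j] for j in range(len(x)))
        s += sum(w_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h.append(math.tanh(s))
    return h


def run_sequence(inputs, w_xh, w_hh, b):
    """Feed a sequence through the cell, starting from a zero hidden state."""
    h = [0.0] * len(b)
    for x in inputs:
        h = rnn_step(x, h, w_xh, w_hh, b)
    return h


# Illustrative weights only: 1 input feature, 2 hidden units.
W_XH = [[0.5], [-0.3]]
W_HH = [[0.1, 0.2], [0.0, 0.4]]
B = [0.0, 0.0]

# The same two observations in different orders, e.g. a "brake light on" flag.
brake_then_release = [[1.0], [0.0]]
release_then_brake = [[0.0], [1.0]]

h_a = run_sequence(brake_then_release, W_XH, W_HH, B)
h_b = run_sequence(release_then_brake, W_XH, W_HH, B)
```

The two final hidden states differ even though the inputs contain the same values, because the state carries information about order. That order sensitivity is what makes recurrent models suited to motion over time.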

Sensors and Data Processing Innovations

Innovations in sensor technology and data processing significantly enhance the capabilities of autonomous vehicles. The development of high-resolution LiDAR, for example, has greatly improved environmental mapping by providing detailed, real-time 3D scans.

These advancements enable more precise detection of objects and obstacles at great distances, improving the vehicle’s ability to navigate safely and efficiently. Such innovations are critical for robust autonomous driving.

Combining modern sensors with AI algorithms allows vehicles to turn raw sensor data into actionable information and to maintain performance in complex and dynamic driving conditions.

High-Resolution LiDAR and Environmental Mapping

High-resolution LiDAR systems build detailed 3D maps by emitting laser pulses and measuring the distance to whatever reflects them. The resulting data captures the contours and shapes of surrounding objects, which supports precise understanding of the environment.
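The text does not say how the distance is obtained. A common approach is pulsed time of flight, where range is half the round-trip time multiplied by the speed of light. The sketch below assumes that approach and also converts one return into a 3D point. The axis convention (x forward, y left, z up) and the function names are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s):
    """Distance to the reflecting surface: the pulse travels out and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_cartesian(range_m, azimuth_deg, elevation_deg):
    """Convert one LiDAR return to (x, y, z) in an assumed sensor frame.

    Assumed convention: x forward, y left, z up, azimuth measured from x
    toward y, elevation measured up from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return x, y, z


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
distance_m = range_from_time_of_flight(200e-9)
point = to_cartesian(distance_m, azimuth_deg=90.0, elevation_deg=0.0)
```

Repeating this for every beam direction in a scan yields the set of 3D points usually called a point cloud.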

These detailed point clouds allow autonomous vehicles to identify static and dynamic obstacles, helping the AI systems plan safe and efficient routes. High-resolution data improves detection of small or distant objects that other sensors might miss.
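One simple way a point cloud can feed route planning is to project it onto a 2D grid of occupied cells. The sketch below is a rough illustration under strong assumptions: the ground is flat, any point higher than a fixed threshold counts as an obstacle, and the cell size and threshold are arbitrary example values. Real pipelines use more careful ground segmentation and tracking over time to separate static from dynamic obstacles.

```python
import math


def occupied_cells(points, cell_size_m=0.5, ground_z_m=0.2):
    """Return the set of (col, row) grid cells containing above-ground points.

    `points` is an iterable of (x, y, z) tuples in meters. Points at or below
    `ground_z_m` are treated as road surface and ignored.
    """
    cells = set()
    for x, y, z in points:
        if z > ground_z_m:
            cells.add((math.floor(x / cell_size_m), math.floor(y / cell_size_m)))
    return cells


# Hypothetical returns: one ground hit, two hits on the same object, one on another.
cloud = [
    (1.2, 0.3, 0.05),   # road surface, dropped
    (1.3, 0.4, 0.9),    # obstacle A
    (1.4, 0.45, 1.1),   # obstacle A again, same cell
    (-0.2, 2.0, 0.5),   # obstacle B, behind and to the left
]

grid = occupied_cells(cloud)
print(sorted(grid))  # [(-1, 4), (2, 0)]
```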

Integration with AI-driven analysis enables the vehicle to interpret LiDAR data in real time, supporting responsive decision-making. This capacity is crucial for timely reactions to unexpected changes in the driving environment, enhancing safety and reliability.

Operational Advantages and Industry Progress

Autonomous vehicles gain operational advantages from using AI to make decisions in real time, improve safety, and optimize routes. Their ability to adapt quickly is useful across diverse driving environments.

Industry progress continues rapidly, with advancements focusing on improving system reliability, integrating connectivity technologies, and addressing regulatory challenges to enable widespread adoption.

These developments bring self-driving cars closer to everyday use, with the promise of fewer traffic accidents, broader access to mobility, and more efficient transportation networks.

Real-Time Path Planning and Control

Real-time path planning allows autonomous vehicles to continuously update their routes based on current road conditions, obstacles, and traffic predictions. This ensures smooth and safe navigation in dynamic settings.
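Continuous route updating can be illustrated with a grid planner that is simply re-run when a new obstacle appears. The sketch below uses A* search with a Manhattan-distance heuristic on a small occupancy grid. A* is chosen here as a familiar example, not because the text specifies it, and the grid, start, and goal are hypothetical. Production planners work in continuous space and respect vehicle dynamics.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (blocked) cells.

    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route to this cell was found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None


road = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
start, goal = (1, 0), (1, 3)

initial_path = astar(road, start, goal)

# Perception reports a new obstacle directly ahead, so the planner runs again.
road[1][2] = 1
replanned_path = astar(road, start, goal)
```

The first plan goes straight along the middle row. After the obstacle is added, the replanned path detours through a neighboring row and is two cells longer.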

AI algorithms analyze sensor inputs with minimal delay to calculate maneuvers such as accelerating, braking, or changing lanes, balancing safety with travel efficiency and passenger comfort.

Control mechanisms then adjust steering, speed, and braking smoothly. Because this loop runs continuously, the vehicle can potentially react to unexpected events faster than a human driver, reducing collision risk and traffic disruption.
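A classic way to adjust speed smoothly toward a target is a PID controller. The sketch below is a generic textbook PID with output clamping and a simple anti-windup rule, driving an idealized vehicle that achieves exactly the commanded acceleration. The gains, acceleration limits, and vehicle model are assumptions chosen for the example, not values from any real vehicle.

```python
class SpeedPID:
    """PID controller whose output is clamped to the actuator limits."""

    def __init__(self, kp, ki, kd, out_min, out_max):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        candidate_integral = self.integral + error * dt
        raw = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        out = max(self.out_min, min(self.out_max, raw))
        if out == raw:
            # Anti-windup: only accumulate the integral while not saturated.
            self.integral = candidate_integral
        return out


def simulate(target_mps, start_mps, seconds, dt=0.1):
    """Run the controller against an idealized vehicle model."""
    # Assumed limits: brake up to 3 m/s^2, accelerate up to 2 m/s^2.
    pid = SpeedPID(kp=1.0, ki=0.1, kd=0.05, out_min=-3.0, out_max=2.0)
    speed = start_mps
    commands = []
    for _ in range(int(round(seconds / dt))):
        accel = pid.update(target_mps - speed, dt)
        commands.append(accel)
        speed += accel * dt  # idealized: commanded acceleration is achieved exactly
    return speed, commands


final_speed, accel_commands = simulate(target_mps=15.0, start_mps=10.0, seconds=10.0)
```

With these example gains the controller accelerates at the limit at first, then eases off as the speed nears the target. Clamping keeps every command within the comfort limits, which is one way passenger comfort enters the control loop.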

IoT Integration and Connectivity Enhancements

IoT integration connects autonomous vehicles to other cars, road infrastructure, and cloud services, enabling real-time data exchange that improves situational awareness and decision-making.

Enhanced connectivity supports cooperative driving, where vehicles share information about hazards or traffic flow, increasing safety and enabling coordinated maneuvers in complex environments.
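Sharing hazard information between vehicles comes down to serializing a small message, sending it, and letting the receiver decide whether it matters. The sketch below uses a made-up JSON schema purely for illustration. The field names, the 200-meter radius, and the 5-second freshness window are all assumptions, and real deployments would use a standardized message format and authenticated transport, which are out of scope here.

```python
import json
import math
from dataclasses import asdict, dataclass


@dataclass
class HazardMessage:
    """Hypothetical hazard report; not a standardized message format."""
    sender_id: str
    hazard_type: str
    x_m: float
    y_m: float
    timestamp_s: float


def encode(msg):
    return json.dumps(asdict(msg))


def decode(payload):
    return HazardMessage(**json.loads(payload))


def is_relevant(msg, ego_x_m, ego_y_m, now_s, radius_m=200.0, max_age_s=5.0):
    """Keep only recent reports about hazards near the receiving vehicle."""
    fresh = 0.0 <= now_s - msg.timestamp_s <= max_age_s
    near = math.dist((msg.x_m, msg.y_m), (ego_x_m, ego_y_m)) <= radius_m
    return fresh and near


sent = HazardMessage("vehicle-17", "stalled_car", x_m=120.0, y_m=5.0, timestamp_s=1000.0)
received = decode(encode(sent))

act_on_it = is_relevant(received, ego_x_m=0.0, ego_y_m=0.0, now_s=1002.0)
too_old = is_relevant(received, ego_x_m=0.0, ego_y_m=0.0, now_s=1010.0)
```

Filtering by age and distance on the receiving side keeps a vehicle from reacting to reports that are stale or too far away to affect its route.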

The combination of AI with IoT also facilitates remote monitoring, predictive maintenance, and software updates, which help keep vehicles operating well and securely throughout their lifecycle.