Fundamentals of Neural Networks

Neural networks are computational systems loosely inspired by the human brain, designed to learn from data and make decisions without being explicitly programmed with task-specific rules.

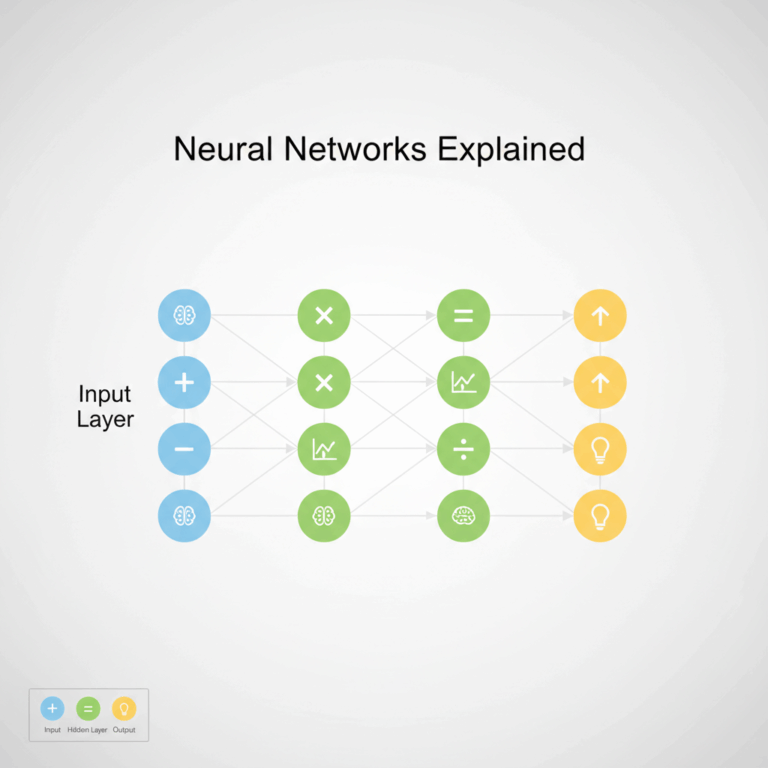

They consist of interconnected nodes called neurons, organized in layers, which process data step-by-step to extract meaningful patterns and insights.

Structure and Components

A neural network is organized into layers: an input layer, one or more hidden layers, and an output layer. Each layer contains one or more neurons.

The input layer receives raw data like images or numbers. Hidden layers transform this data through weighted connections and activation functions.

The output layer produces the final result, such as a classification or prediction, based on processed information from previous layers.
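As a rough illustration of this structure, the sketch below counts the trainable parameters in a small network. It assumes fully connected layers, where every neuron is linked to every neuron in the previous layer; the function name `count_parameters` and the layer sizes are made up for this example.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between the two layers
        total += n_out         # one bias per neuron in the receiving layer
    return total


# Hypothetical network: 4 inputs, one hidden layer of 5 neurons, 3 outputs.
layer_sizes = [4, 5, 3]
n_params = count_parameters(layer_sizes)
print(n_params)
```

The input layer contributes no parameters of its own, since it only passes raw data into the network.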

Role of Neurons and Layers

Neurons act as processing units: each one multiplies its incoming signals by weights that emphasize important features, sums the results, and adds a bias.

Activation functions within neurons introduce non-linearity, enabling neural networks to capture complex, real-world patterns beyond simple linear models.

Layers work sequentially, with each neuron’s output becoming input for the next layer, allowing deep hierarchical feature extraction and learning.
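The computation inside a single neuron can be sketched in a few lines. This is a minimal example that assumes a sigmoid activation; the input values, weights, and bias are illustrative only and do not come from a trained model.

```python
import math


def sigmoid(z):
    """Squash a real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Illustrative values: three inputs, three weights, one bias.
inputs = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, -0.5]
bias = 0.1

activation = neuron_output(inputs, weights, bias)
print(activation)
```

In a full network, this activation would become one of the inputs to every neuron in the next layer.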

Data Processing in Neural Networks

Neural networks process data through a series of layers, starting from the input and moving through internal transformations in hidden layers before reaching the output.

This layered processing enables the network to learn complex features by adjusting connections based on the data it receives and the task at hand.

Input, Hidden, and Output Layers

The input layer accepts raw data such as images, text, or numerical values, presenting them as signals to the network.

Hidden layers process these inputs by applying weights and biases across neurons, progressively extracting higher-level features.

The output layer aggregates the final transformed information to generate predictions, classifications, or decisions.

Activation Functions and Their Importance

Activation functions introduce crucial non-linearity into the network, allowing it to model complex relationships within data.

Common functions include sigmoid, which maps values into the range (0, 1); tanh, which maps them into (-1, 1); and ReLU, which passes positive values unchanged and sets negative values to zero.

Without activation functions, any stack of layers would collapse into a single linear transformation, so the network would behave like a simple linear model no matter how many layers it had.
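The sketch below defines the three activation functions named above and then illustrates why they matter: two stacked layers with no activation compute exactly the same mapping as one linear layer. It uses single-input, single-neuron layers for brevity, and all weight and bias values are arbitrary.

```python
import math


def sigmoid(z):
    """Map any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Pass positive values through unchanged and clip negatives to zero."""
    return max(0.0, z)


def tanh(z):
    """Map any real number into (-1, 1)."""
    return math.tanh(z)


# Two stacked "layers" with no activation: y = w2 * (w1 * x + b1) + b2
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5


def two_linear_layers(x):
    return w2 * (w1 * x + b1) + b2


# The same mapping written as a single linear layer.
w_combined = w2 * w1
b_combined = w2 * b1 + b2


def one_linear_layer(x):
    return w_combined * x + b_combined


for x in (-2.0, 0.0, 1.5):
    # The two printed values agree for every x.
    print(x, two_linear_layers(x), one_linear_layer(x))
```

Placing a non-linear function such as `relu` between the two layers breaks this equivalence, which is what lets deeper networks represent more than a straight line.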

Feedforward Mechanism

In the feedforward process, data moves forward through the network, from inputs through hidden layers to outputs without looping back.

Each neuron computes a weighted sum of its inputs, adds its bias, applies an activation function, and forwards the result to the next layer’s neurons.

Because each layer depends only on the output of the layer before it, a forward pass is a single sequence of computations that turns inputs into outputs.
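A forward pass through a small network might look like the following sketch. It assumes fully connected layers and represents each layer as a tuple of weights, biases, and an activation function; the helper names `dense_layer` and `feedforward`, the network shape, and all numeric values are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """Compute one layer's outputs.

    weights[j] holds the incoming weights of neuron j in this layer.
    """
    return [
        activation(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def feedforward(inputs, layers):
    """Pass the signal through each layer in order, never looping back."""
    signal = inputs
    for weights, biases, activation in layers:
        signal = dense_layer(signal, weights, biases, activation)
    return signal


# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.5], [1.0, 1.0], [-1.0, 0.5]], [0.0, -0.5, 0.1], sigmoid),
    ([[1.0, -1.0, 0.5]], [0.2], sigmoid),
]

prediction = feedforward([1.0, 2.0], layers)
print(prediction)  # a list with one value between 0 and 1
```

Because the weights here are arbitrary, the prediction is meaningless; training is what turns these numbers into useful ones.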

Training and Learning Mechanisms

Training neural networks involves adjusting the weights and biases that control how input data transforms as it passes through the network.

By optimizing these parameters, the model progressively improves its accuracy in predicting or classifying new data based on learned patterns.

Weight Adjustment and Bias

Weights represent the strength of connections between neurons and indicate the importance of each input in determining the output.

Biases are additional parameters added to neurons to shift activation functions, helping the network fit data more flexibly.

During training, weights and biases are updated iteratively to minimize the difference between predicted and actual outcomes, as measured by a loss function.
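One common way to quantify the difference between predicted and actual outcomes is mean squared error. The text does not commit to a specific measure, so treat this choice, and the function name `mean_squared_error`, as an assumption made for illustration.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets."""
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared_errors) / len(squared_errors)


# Illustrative values: the second prediction is off by 2, the first is exact.
loss = mean_squared_error([1.0, 2.0], [1.0, 4.0])
print(loss)  # 2.0
```

A value of zero means every prediction matches its target, so training aims to push this number down.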

Backpropagation and Gradient Descent

Backpropagation is an algorithm that uses the chain rule to calculate the gradient of the error with respect to the network’s weights and biases, working backward from outputs to inputs.

This process identifies how each weight contributes to the error, enabling targeted adjustments to improve performance.

Gradient descent uses these gradients to update each weight by a small step, scaled by a learning rate, in the direction opposite the gradient, which is the direction in which the error decreases fastest locally.
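The sketch below trains a single sigmoid neuron to reproduce the logical OR function. With only one neuron, backpropagation reduces to a single application of the chain rule, but the forward pass, backward pass, and update steps are the same ones a deeper network repeats layer by layer. The squared-error loss, the per-sample (stochastic) updates, the learning rate, and the epoch count are all assumptions chosen for this example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, learning_rate=0.5, epochs=2000):
    """Fit one sigmoid neuron with per-sample gradient descent."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Forward pass: weighted sum, then activation.
            z = sum(x * w for x, w in zip(inputs, weights)) + bias
            a = sigmoid(z)

            # Backward pass for loss = 0.5 * (a - target) ** 2 via the chain rule.
            d_loss_d_a = a - target
            d_a_d_z = a * (1.0 - a)  # derivative of the sigmoid
            d_loss_d_z = d_loss_d_a * d_a_d_z

            # Gradient descent step: move each parameter against its gradient.
            weights = [
                w - learning_rate * d_loss_d_z * x
                for w, x in zip(weights, inputs)
            ]
            bias -= learning_rate * d_loss_d_z
    return weights, bias


# Training data for logical OR: (inputs, target).
or_samples = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 1.0),
]

weights, bias = train_neuron(or_samples)


def predict(inputs):
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)


for inputs, target in or_samples:
    print(inputs, target, round(predict(inputs), 3))
```

A learning rate that is too large can make the updates overshoot, while one that is too small slows training, so this value usually needs tuning for each problem.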

Applications and Capabilities

Neural networks excel at recognizing complex patterns by learning from vast amounts of data, making them invaluable in modern technology.

They can identify subtle relationships in data that traditional algorithms might miss, enabling advanced capabilities in various fields.

Complex Pattern Recognition

Neural networks detect intricate features in images, sounds, and text by leveraging multiple layers that extract progressively abstract representations.

This ability allows tasks like facial recognition, speech recognition, and handwriting analysis to reach high accuracy levels.

By modeling complex dependencies, neural networks can often identify patterns even when data is noisy or incomplete.

Real-world Problem Solving

Neural networks solve practical problems in industries such as healthcare, finance, and autonomous systems by interpreting diverse data types.

Their learning capability enables applications like medical diagnosis, fraud detection, and self-driving cars, improving effectiveness and automation.

Overall, neural networks’ flexibility and learning power contribute significantly to cutting-edge innovations impacting daily life.